This tutorial introduces you to configuring storage on OpenShift and using it to build stateful applications.

By default, OpenShift/Kubernetes containers don’t store data persistently. In practice, when you start a container from an immutable Docker image, OpenShift uses ephemeral storage: all data created by the container is lost when you stop or restart the container. This approach works well for stateless scenarios; however, applications such as a database or a messaging system need persistent storage that is able to survive a container crash or restart.

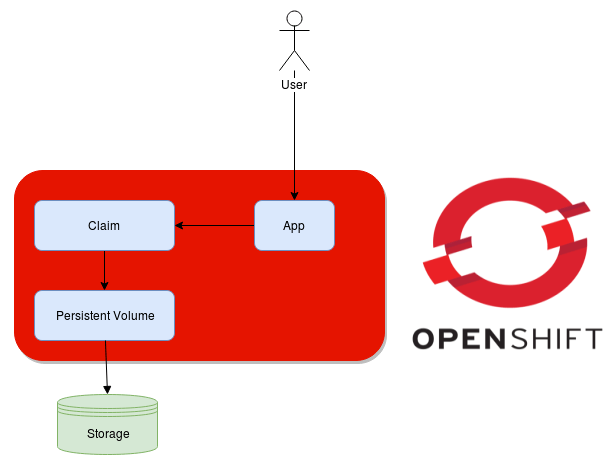

To achieve that, you need an object called PersistentVolume (PV), a storage resource in an OpenShift cluster that is made available to developers via Persistent Volume Claims (PVCs).

A Persistent Volume is shared across the OpenShift cluster, since any PV can potentially be used by any project. A Persistent Volume Claim, on the other hand, is a resource that is specific to a project (namespace).

Under the hood, when you create a PVC, OpenShift tries to find a matching PV based on the size requirements and the access mode (ReadWriteOnce/RWO, ReadOnlyMany/ROX, ReadWriteMany/RWX). If one is found, the PV is bound to the PVC and can no longer be bound to other volume claims.
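As a minimal sketch of what drives this matching, the claim below asks for 5Gi with the ReadWriteOnce access mode. The name example-claim is hypothetical and not part of this tutorial. A PV is a candidate only if its capacity is at least the requested size, its access modes include the requested one and, when a storage class is set, the class matches as well:

```yaml
# Hypothetical claim, used only to illustrate the matching criteria.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim
spec:
  accessModes:
    - ReadWriteOnce      # RWO: mounted read-write by a single node
  resources:
    requests:
      storage: 5Gi       # a PV with capacity >= 5Gi can satisfy this request
```

Note that a claim binds a whole PV: if a 20Gi volume is selected for a 5Gi request, the remaining capacity is not available to other claims.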

Persistent Volumes and Persistent Volume Claims

So to summarize: a PersistentVolume is an OpenShift API object that describes an existing piece of storage infrastructure (NFS share, GlusterFS volume, iSCSI target, etc.). A Persistent Volume Claim represents a request made by an end user, which consumes PV resources.

Persistent Volume Types

OpenShift supports a wide range of PersistentVolume types. Some of them, like NFS, you probably already know, and all of them have pros and cons:

NFS

Static provisioner, manually and statically pre-provisioned, inefficient space allocation

Well known by system administrators, easy to set up, good for tests

Supports the ReadWriteOnce and ReadWriteMany access modes

Ceph RBD

Can provision resources dynamically: Ceph block devices are automatically created, presented to the host, formatted and mounted into the container

Excellent when running Kubernetes on top of OpenStack

Ceph FS

Same as RBD, but it already provides a filesystem, and a shared one

Supports ReadWriteMany

Excellent when running Kubernetes on top of OpenStack with Ceph

Gluster FS

Dynamic provisioner

Supports ReadWriteOnce

Available on-premises and in the public cloud, with a lower TCO than public cloud providers’ Filesystem-as-a-Service offerings

Supports Container Native Storage

GCE Persistent Disk / AWS EBS / AzureDisk

Dynamic provisioner: block devices are requested via the provider API, then automatically presented to the instance running Kubernetes/OpenShift and to the container, formatted, etc.

Does not support ReadWriteMany

Performance may be problematic on small capacities (<100 GB, typical for PVCs)

AWS EFS / AzureFile

Dynamic provisioner, filesystems are requested via the provider API, mounted on the container host and then bind-mounted to the app container

Supports ReadWriteMany

Usually quite expensive

NetApp

Dynamic provisioner called Trident

Supports ReadWriteOnce (block or file-based), ReadWriteMany (file-based), ReadOnlyMany (file-based)

Requires NetApp Data ONTAP or SolidFire storage
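For the dynamic provisioners listed above, the administrator typically defines a StorageClass and developers reference it from their claims. The following is only a sketch for AWS EBS, assuming a cluster running on AWS where the in-tree kubernetes.io/aws-ebs provisioner is available (newer clusters may use a CSI driver instead). The names fast-ebs and ebs-claim are hypothetical:

```yaml
# Sketch only: assumes a cluster on AWS with the in-tree EBS provisioner.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ebs                 # hypothetical name
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2                      # EBS volume type
reclaimPolicy: Delete            # the volume is removed when the claim is deleted
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim                # hypothetical name
spec:
  storageClassName: fast-ebs     # requests a volume through the class above
  accessModes:
    - ReadWriteOnce              # block devices such as EBS do not support ReadWriteMany
  resources:
    requests:
      storage: 10Gi
```

With a class like this in place, no PV has to be created by hand: the provisioner creates one when the claim is submitted.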

Two kinds of Storage

There are essentially two types of storage for containers:

✓ Container-ready storage: This is essentially a setup where storage is exposed to a container or a group of containers from an external mount point over the network. Most storage solutions, including SDS, storage area networks (SANs), or network-attached storage (NAS) can be set up this way using standard interfaces. However, this may not offer any additional value to a container environment from a storage perspective. For example, few traditional storage platforms have external application programming interfaces (APIs), which can be leveraged by Kubernetes for Dynamic Provisioning.

✓ Storage in containers: Storage deployed inside containers, alongside applications running in containers, is an important innovation that benefits both developers and administrators. By containerizing storage services and managing them under a single management plane such as Kubernetes, administrators have fewer housekeeping tasks to deal with, allowing them to focus on more value-added tasks. In addition, they can run their applications and their storage platform on the same set of infrastructure, which reduces infrastructure expenditure.

Developers benefit by being able to provision application storage that’s both highly elastic and developer-friendly. OpenShift takes storage in containers to a new level by integrating Red Hat Gluster Storage into Red Hat OpenShift Container Platform, a solution known as Container-Native Storage.

In this tutorial we will use a container-ready storage example that uses an NFS mount point on “/exports/volume1”.

Configuring Persistent Volumes (PV)

To start configuring a Persistent Volume you have to switch to the admin account:

$ oc login -u system:admin

We assume that you have NFS storage available at the following path on the node: /mnt/exportfs (the Persistent Volume below references this directory through hostPath).

The following mypv.yaml provides a Persistent Volume Definition and a related Persistent Volume Claim:

kind: PersistentVolume
apiVersion: v1
metadata:
  name: mysql-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/exportfs"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
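The definition above uses hostPath, which points at a directory on the node itself. If you would rather have the cluster mount the NFS export directly, a PV can use the nfs volume source instead. The following is a sketch under assumptions: nfs.example.com is a placeholder for your NFS server, and the name mysql-pv-nfs is hypothetical:

```yaml
# Sketch only: nfs.example.com is a placeholder, not a real server.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-nfs             # hypothetical name
spec:
  storageClassName: manual       # same class as the claim, so the two can bind
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: nfs.example.com      # assumption: replace with your NFS server
    path: /mnt/exportfs          # directory exported by that server
```

Because the claim only asks for a size, an access mode and a storage class, it does not need to change when the PV is backed by NFS rather than hostPath.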

You can create both resources using:

oc create -f mypv.yaml

Let’s check the list of Persistent Volumes:

$ oc get pv
NAME              CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
mysql-pv-volume   10Gi       RWO            Recycle          Available

As you can see, the “mysql-pv-volume” Persistent Volume is included in the list. You will also find a list of pre-built Persistent Volumes.

Using Persistent Volumes in pods

After creating the Persistent Volume, we can request storage through a PVC and later attach that PVC as a volume to containers in pods. Keep in mind that a PVC is namespaced, so it must exist in the same project as the pods that mount it. For this purpose, let’s create a new project to manage this persistent storage:

oc new-project persistent-storage

Now let’s define a MySQL app in a file named mysql-deployment.yaml, which contains a reference to our Persistent Volume Claim:

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql
  clusterIP: None
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
          # Use secret in real usage
        - name: MYSQL_ROOT_PASSWORD
          value: password
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pv-claim

Create the app using the oc command:

oc create -f mysql-deployment.yaml

This will automatically deploy a MySQL container in a Pod and place the database files on the persistent storage.
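The deployment file notes that a secret should be used for the root password in real usage. One way to do that, sketched here with a hypothetical secret name (mysql-secret) and key (root-password), is to store the password in a Secret:

```yaml
# Sketch only: the secret name and key are hypothetical.
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
type: Opaque
stringData:
  root-password: password        # replace with a real password
```

The env entry of the MySQL container would then reference the secret instead of a literal value:

```yaml
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret     # must match the Secret's metadata.name
              key: root-password     # key defined under stringData
```

The Secret has to be created in the same project as the Deployment before the Pod starts.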

$ oc get pods
NAME             READY   STATUS    RESTARTS   AGE
mysql-1-kpgjb    1/1     Running   0          13m
mysql-2-deploy   1/1     Running   0          2m
mysql-2-z6rhf    0/1     Pending   0          2m

Let’s check that the Persistent Volume Claim has been bound correctly:

$ oc describe pvc mysql-pv-claim
Name:          mysql-pv-claim
Namespace:     default
StorageClass:
Status:        Bound
Volume:        mysql-pv-volume
Labels:        <none>
Annotations:   pv.kubernetes.io/bind-completed=yes
               pv.kubernetes.io/bound-by-controller=yes
Capacity:      10Gi
Access Modes:  RWO
Events:        <none>

Done! Now let’s connect to the MySQL Pod using the mysql tool and add a new database:

$ oc rsh mysql-1-kpgjb
sh-4.2$ mysql -u root -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.

Let’s add a new Database named “sample”:

MySQLDB [(none)]> create database sample;
Query OK, 1 row affected (0.00 sec)
MySQLDB [(none)]> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| openshift          |
| performance_schema |
| sample             |
| test               |
+--------------------+
6 rows in set (0.00 sec)
MySQL [(none)]> exit

As proof of concept, we will kill the Pod where MySQL is running so that it will be automatically restarted:

$ oc delete pod mysql-1-kpgjb
pod "mysql-1-kpgjb" deleted

In a few seconds the Pod will restart:

$ oc get pods
NAME             READY   STATUS    RESTARTS   AGE
mysql-1-5jmc5    1/1     Running   0          27s
mysql-2-deploy   1/1     Running   0          3m
mysql-2-z6rhf    0/1     Pending   0          3m

Let’s connect again to the Database and check that the “sample” DB is still there:

$ oc rsh mysql-1-5jmc5
sh-4.2$ mysql -u root -p
Enter password:
Welcome to the MySQLDB monitor. Commands end with ; or \g.
Your MySQLDB connection id is 9
Server version: 10.2.8-MySQLDB MySQL Server
Copyright (c) 2000, 2017, Oracle, MySQL Corporation Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MySQL [(none)]> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| openshift          |
| performance_schema |
| sample             |
+--------------------+

Great! As you can see, the Persistent Volume Claim used by the app made the changes to the database persistent.
