This article will guide you in troubleshooting Java application by analysing a Thread dump with the instruments available in the JDK. We will also learn some tools to simplify our analysis.

Java has mechanisms for analyzing the state of all threads of an application at a given time: Thread dumps. A thread dump is a list of all running JVM threads at a certain time. The dump shows for each Thread its Stack trace plus some additional information such as the Thread status, priority, cpu usage and if a monitor is associated to that Thread.

Capturing a Thread Dump

Firstly, let’s learn how to capture a dump: there are several options to do that: on Linux/Unix machines, you can send the SIGQUIT signal to the JVM Process. That will cause the JVM to emit a Thread Dump:

kill -3 <PID>

By default, the output of the “kill -3” command is on the server console. You can choose to redirect this output to a file by adding these JVM start up options:

-XX:+UnlockDiagnosticVMOptions -XX:+LogVMOutput -XX:LogFile=jvm.log

Besides the kill command, there are two tools which are available in any JDK installation that are more practical and can redirect to a file without any start up option. The first one is the jstack command which requires as argument the Java Process PID:

jstack -l <PID > jstack.out

Much the same way, you can use jcmd which is also in your JDK default tookit:

jcmd <PID> Thread.print

Finally, a tip for Windows users. If the JVM was started from a command prompt or console, the thread dump can be triggered by pressing CTRL+Break in the console window where the JVM is running.

Inside the Thread Dump

Before we dig into a Thread Dump, we need a brief recap on the status of a Java Thread:

Once you have started a Thread, it can enter one of the following status:

- Runnable: In this state, a thread might actually be running or it might be ready to run at any instant of time. It is the responsibility of the thread scheduler to give the thread, time to run.

- Timed Waiting: A thread enters this state when there is a conditional wait. For example a thread sleep or when it’s running an operation which has a timeout

- Blocked/Waiting state: When a thread is temporarily inactive, then it’s in one of the following states:

- Blocked: The BLOCKED thread is waiting for other thread to release the lock. You cannot interrupt a BLOCKED thread.

- Waiting: The WAITING thread is waiting for notification from other threads. The WAITING thread can be interrupted.

Next, let’s look at a Thread Dump example:

"Thread-5 (org.apache.activemq.artemis.core.remoting.impl.invm.InVMConnector)" #272 prio=5 os_prio=0 cpu=0.83ms elapsed=7.12s tid=0x0000000007d97800 nid=0x1015 waiting on condition [0x00007f1913559000] java.lang.Thread.State: TIMED_WAITING (parking) at jdk.internal.misc.Unsafe.park(java.base@11/Native Method) - parking to wait for <0x00000000e318fb48> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject) at java.util.concurrent.locks.LockSupport.parkNanos(java.base@11/LockSupport.java:234) at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(java.base@11/AbstractQueuedSynchronizer.java:2123) at java.util.concurrent.LinkedBlockingQueue.poll(java.base@11/LinkedBlockingQueue.java:458) at org.apache.activemq.artemis.utils.ActiveMQThreadPoolExecutor$ThreadPoolQueue.poll(ActiveMQThreadPoolExecutor.java:112) at org.apache.activemq.artemis.utils.ActiveMQThreadPoolExecutor$ThreadPoolQueue.poll(ActiveMQThreadPoolExecutor.java:45) at java.util.concurrent.ThreadPoolExecutor.getTask(java.base@11/ThreadPoolExecutor.java:1053) at java.util.concurrent.ThreadPoolExecutor.runWorker(java.base@11/ThreadPoolExecutor.java:1114) at java.util.concurrent.ThreadPoolExecutor$Worker.run(java.base@11/ThreadPoolExecutor.java:628) at org.apache.activemq.artemis.utils.ActiveMQThreadFactory$1.run(ActiveMQThreadFactory.java:118) Locked ownable synchronizers: - None

The above is a Thread from an application server which uses an embedded JMS Server. Focus on the last library class in the stack trace: the Class ActiveMQThreadPoolExecutor.java, You can check the source code of ActiveMQThreadPoolExecutor.java from GitHub

@Override

public Runnable poll(long arg0, TimeUnit arg2) throws InterruptedException {

// Increment the delta as a thread becomes idle

// by waiting for a task to poll from the queue

DELTA_UPDATER.incrementAndGet(this);

Runnable runnable = null;

try {

runnable = super.poll(arg0, arg2);

} finally {

// Now the thread is no longer idle waiting for a task

// If it had taken a task, the delta remains the same

// (decremented by the thread and incremented by the taken task)

if (runnable == null) {

DELTA_UPDATER.decrementAndGet(this);

}

}

return runnable;

}

}

Basically, the above code is polling for some data. There’s a counter to check how long the Thread has been idle.

Finally, it is important to note that individual threads sitting in a TIME_WAITING state don’t affect CPU. In the next section let’s see an example of a Thread affecting the CPU.

Threads and CPU usage

The CPU load is proportional to the number of active processes per unit time which are in running states and uninterruptible states (such as I/O lock and kernel lock.). Let’s see this with an example:

"App-Thread1" #1 prio=5 os_prio=0 cpu=22484.99ms elapsed=77.44s tid=0x00007fcbfc00f800 nid=0x16a4 runnable [0x00007fcc01437000] java.lang.Thread.State: RUNNABLE at java.io.FileOutputStream.writeBytes(java.base@11/Native Method) at java.io.FileOutputStream.write(java.base@11/FileOutputStream.java:354) at java.io.BufferedOutputStream.flushBuffer(java.base@11/BufferedOutputStream.java:81) at java.io.BufferedOutputStream.flush(java.base@11/BufferedOutputStream.java:142) - locked <0x0000000430062c00> (a java.io.BufferedOutputStream) at java.io.PrintStream.write(java.base@11/PrintStream.java:561) - locked <0x0000000430062bd8> (a java.io.PrintStream) at sun.nio.cs.StreamEncoder.writeBytes(java.base@11/StreamEncoder.java:233) at sun.nio.cs.StreamEncoder.implFlushBuffer(java.base@11/StreamEncoder.java:312) at sun.nio.cs.StreamEncoder.flushBuffer(java.base@11/StreamEncoder.java:104) - locked <0x000000043007a7d0> (a java.io.OutputStreamWriter) at java.io.OutputStreamWriter.flushBuffer(java.base@11/OutputStreamWriter.java:184) at java.io.PrintStream.write(java.base@11/PrintStream.java:606) - eliminated <0x0000000430062bd8> (a java.io.PrintStream) at java.io.PrintStream.print(java.base@11/PrintStream.java:745) at java.io.PrintStream.println(java.base@11/PrintStream.java:882) - locked <0x0000000430062bd8> (a java.io.PrintStream) at acme.com.Connector.connect(Connector.java:104) at acme.com.Connector.init(Connector.java:54)

This Thread dump tells us a different story. The Thread “App-Thread1” is in RUNNABLE state and it’s overusing the CPU (cpu=22484.99ms) by writing streams of data. The method connect from the acme.com.Connector class is actively writing and flushing on the filesystem.

Rule of Thumb #1 – check for application packages in the Thread dump

Look for your own application classes in the Thread Dump. Check the Thread status and CPU consumption of these Threads.

If there are no evident flaws in your code, follow the flow in the Thread dump to see where the call lands.

If the stack points to a Java library check for known issues for that Class. On the other hand, if the stack points to Java internals/native execution then you should check JVM or your OS Settings.

Rule of Thumb #2 – Use Java 11+ to display CPU usage of each Thread

If you are using JDK 11+ you will see a crucial information in the Thread dump: the CPU usage of the Thread.

If you are on a lower version of Java, follow this procedure: with the ‘top’ command find the java Threads id which are consuming most of the CPU. Transform the PID in Hex format with:

$ printf 0x%x 10640 0x2c4c

Then, in your Thread dump look for the Thread with nid=0x2c4c . You can learn more about this topic here: Finding which Java threads are consuming your CPU

Threads and Poor Performance

The following example will cover another use case. Overall, your CPU/Memory is under control however the application is hanging or performing badly. Let’s look at this stack trace:

"ajp-j2ee.instance.jirastudio.com-jira%2F127.0.0.104-8009-1" daemon prio=10 tid=0x0ab53400 nid=0x3805 in Object.wait() [0x714c0000]

java.lang.Thread.State: WAITING (on object monitor)

at java.lang.Object.wait(Native Method)

at org.apache.commons.httpclient.MultiThreadedHttpConnectionManager.doGetConnection(MultiThreadedHttpConnectionManager.java:509)

- locked <0x8d0e0df8> (a org.apache.commons.httpclient.MultiThreadedHttpConnectionManager$ConnectionPool)

at org.apache.commons.httpclient.MultiThreadedHttpConnectionManager.getConnectionWithTimeout(MultiThreadedHttpConnectionManager.java:394)

at org.apache.commons.httpclient.HttpMethodDirector.executeMethod(HttpMethodDirector.java:152)

at org.apache.commons.httpclient.HttpClient.executeMethod(HttpClient.java:396)

at org.apache.commons.httpclient.HttpClient.executeMethod(HttpClient.java:324)

at com.atlassian.crowd.integration.rest.service.RestExecutor$MethodExecutor.executeCrowdServiceMethod(RestExecutor.java:443)

at com.atlassian.crowd.integration.rest.service.RestExecutor$MethodExecutor.andCheckResponse(RestExecutor.java:369)

at com.atlassian.crowd.integration.rest.service.RestCrowdClient.invalidateSSOToken(RestCrowdClient.java:854)

at com.atlassian.crowd.integration.http.CrowdHttpAuthenticatorImpl.logout(CrowdHttpAuthenticatorImpl.java:175)

at com.atlassian.crowd.integration.http.CacheAwareCrowdHttpAuthenticator.logout(CacheAwareCrowdHttpAuthenticator.java:78)

at com.atlassian.crowd.integration.seraph.v22.CrowdAuthenticator.logout(CrowdAuthenticator.java:145)

at com.atlassian.crowd.integration.seraph.v22.CrowdAuthenticator.login(CrowdAuthenticator.java:110)

at com.atlassian.seraph.filter.PasswordBasedLoginFilter.runAuthentication(PasswordBasedLoginFilter.java:127)

at com.atlassian.seraph.filter.PasswordBasedLoginFilter.login(PasswordBasedLoginFilter.java:72)

This stack trace is from an actual issue with Apache HttpClient due to of Connections. In practice, all components of a System have some constraints on the usage of resources. Each resource (database connection, thread executor etc.) is generally maintained in a pool or so. Once that the number of requests exceed those available, the threads will be in WAITING or BLOCKED state.

Rule of Thumb #3 – Check constraints for WAITING/BLOCKED Threads

Look out for Threads in WAITING or BLOCKED state. Find which configuration setting might cause your Thread to be in this state. Check all the max-threads / max-connections limits that you have. Also note, the limit might be on external systems as well: for example the Database System Global Area (SGA) or the number of cursors available.

With regards to WildFly connections, check here: JBoss run out of Connections ? here is how to fix it

Rule of Thumb #4 – Look which Thread is holding the lock

Check which Thread is owning the lock for a Thread. If two Threads are waiting each other lock you are in a deadlock:

"Thread-0":

waiting to lock monitor 0x000000002334a280 (object 0x00000000c7a49f40,

a java.lang.Object),

which is held by "Thread-1"

"Thread-1":

waiting to lock monitor 0x000000002334a480 (object 0x00000000c7a49f30,

a java.lang.Object),

which is held by "Thread-0"

Please note: the default deadlock detection works with locks that are obtained using the synchronized keyword and through the java.util.concurrent package. If the Java VM flag -XX:+PrintConcurrentLocks is set, then the stack trace also shows a list of lock owners.

Tools you can use to analyse Thread Dumps

An application can run hundreds of Threads. For this reason, you need a tool which is able to group them by category end let you spot which are the most common Stack Traces in a dump. There are several tools you can use including online tools and others you can download and even install on your IDE. Let’s see a selection of them.

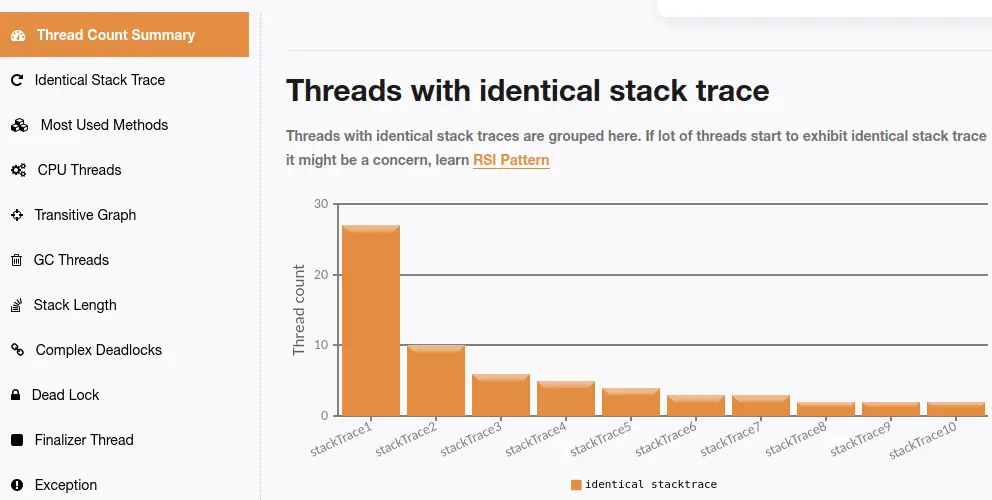

Rule of Thumb #5 – Pay attention to the Threads which have the same Stack Trace

Whatever is the tool you use, focus at first on threads which have an identical Stack Trace.

Check if the application logic requires to have a large number of Threads RUNNING or WAITING in that state.

Also, compare Thread dumps at different times to see if some Thread groups increase over time.

Tool 1: Fast Thread online Tool

FastThread is one of the best tool to analyse a Thread Dump. You can reach it at: https://fastthread.io/ The free plan allows to use 5 uploads each month. After uploading the Dump, an Intelligence Report will be available:

Focus on the following aspects:

- Threads with identical stack trace: This section groups a list of Threads with the same Stack Trace. This is indicative of resource contention on external resources such as RDBMS or Thread Pools. That is the first clue that you need to analyze when you are looking for the root cause.

- Most used methods: If you take multiple Thread dumps in sequence, you can get an idea of which methods are the most used in your Java application.

- CPU consuming threads: FastThread is particularly smart in spotting Threads which can be responsible for high CPU consumption.

- Blocking threads: Blocking threads can potentially make your application unresponsive. You can get them listed in a separate section. If Blocked threads are causing a Deadlock, it will be reported.

- Exceptions: Thread dumps that contain exceptions in the Stack Trace are also grouped in a section so that you can do further investigations.

Tool 2: JStack

JStack is a free tool available at: https://jstack.review/

Once you upload a dump, you will be able to:

- Group your Threads by Status

- Find Threads with identical Stack Trace

- Compare Thread dumps by uploading multiple dump files

Tool 3: Thread Dump Analyzer (TDA)

The TDA Thread Dump Analyzer for Java is a compact Swing graphical user interface (GUI) that you can use to analyze sets of Thread Dumps. It provides statistical insights into the detected thread dumps, offers details concerning locked monitors and waiting threads. The tool can also perform thread comparisons to identify commonalities and includes a feature for detecting long-running threads.

One simple way to run the TDA tool is to launch its jBang alias. Have jBang available on your machine and just run:

jbang tda@maxandersen/tda

YATDA

YATDA (Yet another thread dump analyzer) is a shell script which can help you to detect common concerns for JBoss or Tomcat Web servers.You can download it from https://github.com/aogburn/yatda

Yatda collects and sorts the tops of thread stacks to see what threads are most commonly doing. It provides specific usage and stats of JBoss request threads and boot threads and can be specified to look for some other custom thread name if there is some other thread of interest. You can run the script passing as argument (-f) the dump name:

./yatda.sh -f mydump.txt

Within the generated report, you will see

- Specific findings: for example if the number of current request threads is a good fit with the default Thread pool size.

- Top lines of request threads

- Most common from first 20 lines of request threads

- Top lines of all threads

- Most common from first 10 lines of all threads

A single Thread dump is not enough. In most cases, you will need to take multiple times a Thread dump to confirm your hypothesis. Let’s see how to capture them.

Capturing dumps at regular intervals

A common best practice is to collect a set of dumps at regular intervals. For example every 10 seconds.

This is a basic shell script that allows you to collect your dumps:

#!/bin/sh # Generate N thread dumps of the process PID with an INTERVAL between each dump. if [ $# -ne 3 ]; then echo Generates Java thread dumps using the jstack command. echo echo usage: $0 process_id repetitions interval exit 1 fi PID=$1 N=$2 INTERVAL=$3 for ((i=1;i<=$N;i++)) do d=$(date +%Y%m%d-%H%M%S) dump="./threaddump/threaddump-$PID-$d.txt" echo $i of $N: $dump jstack -l $PID > $dump sleep $INTERVAL done

To take 10 Thread Dumps with an interval of 10 seconds:

./threaddump-script.sh <pid> 10 10s

Capturing a Thread Dump of a Java application in a container

Finally, we will show how you can capture a Thread dump from a Java application running in a Container.

Firstly, you need to fetch the ContainerId of your application running in a Container:

$ docker ps CONTAINER ID IMAGE 948303c4e2ab quay.io/wildfly/wildfly-centos7

Next, store the PID of your Java Process in a variable:

PID=$(docker exec 948303c4e2ab jps | grep -v Jps | awk '{print $1}')

The above command uses the ‘jps’ utility which is available in the bin folder of Java. We are storing the PID of the Java process in PID.

The rest is pretty simple, use the jstack command on the remote machine and the output will be printed in your host console:

docker exec 948303c4e2ab jstack -l $PIDFound the article helpful? if so please follow us on Socials